For those who have followed the evolution of Natural Language Processing since 2017/2018, the Transformer and BERT are no strangers. Since Alexander Rush, Jay Alammar and, more recently, Peter Bloem have written great detailed introductions, I don’t intend to cover what’s under the hood. Instead, I am looking for the first principle and trying to draw an analogy with something easier to understand: a database and memory.

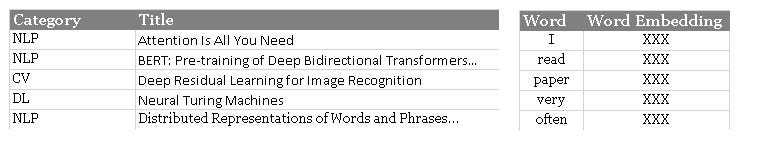

If you can read a SQL query, you can probably get the essence of the Transformer architecture. Even if you cannot, this one should not be hard to follow: I am just extracting the author and title of all NLP papers from my “paperbase”.

SELECT Author, Title FROM Paperbase WHERE Category = 'NLP';

A query to the database has to flexibly accommodate all sorts of keys (Category = “NLP”) and values (Author and Title). At the same time, the keys and values have to flexibly serve whatever query a user makes. Behind SQL, this is governed by a data schema that is shared across query, key and value. A valid record contains all the fields, so any of them can serve as a key or as a value.
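To make the key and value roles concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table contents ("Author A", "Paper one", and so on) are placeholders I made up for illustration, not real papers. The second query shows the interchangeability: the same schema answers a lookup where Title is the key and Category is the value.

```python
import sqlite3

# Hypothetical "paperbase": the rows below are placeholders, not real papers.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Paperbase (Author TEXT, Title TEXT, Category TEXT)")
conn.executemany(
    "INSERT INTO Paperbase VALUES (?, ?, ?)",
    [
        ("Author A", "Paper one", "NLP"),
        ("Author B", "Paper two", "CV"),
        ("Author C", "Paper three", "NLP"),
    ],
)

# Key = Category, values = (Author, Title): the query from the article.
rows = conn.execute(
    "SELECT Author, Title FROM Paperbase WHERE Category = ? ORDER BY Author",
    ("NLP",),
).fetchall()
print(rows)

# Same schema, roles swapped: Title is now the key, Category the value.
category = conn.execute(
    "SELECT Category FROM Paperbase WHERE Title = ?",
    ("Paper two",),
).fetchall()
print(category)
```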

Both are Queries in Nature

At the time the query is passed to the computer, it’s a two-step process:

This is surprisingly similar to the attention mechanism, the backbone of the Transformer:

There are two highlights I want to make:

I have drawn an analogy between SQL and Attention Mechanism.
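One way to read the analogy in code: a SQL `WHERE` clause is a hard match (a row is either returned or not), while attention is a soft match that scores the query against every key and returns a weighted mix of the values. The sketch below uses scaled dot-product attention in NumPy. The keys, values and query are toy numbers I chose for illustration, and the function names are my own.

```python
import numpy as np

def softmax(x):
    # Subtract the row max for numerical stability before exponentiating.
    e = np.exp(x - np.max(x, axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def attention(Q, K, V):
    # Step 1: match each query against every key (scaled dot product).
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    weights = softmax(scores)  # each row is a distribution over records
    # Step 2: return a weighted mix of the values instead of exact rows.
    return weights @ V, weights

# Toy, made-up data: three records with 2-d keys and 2-d values.
K = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]])
V = np.array([[10.0, 0.0], [0.0, 10.0], [20.0, 0.0]])
Q = np.array([[5.0, 0.0]])  # a query that resembles keys 0 and 2

out, weights = attention(Q, K, V)
print(weights)  # records 0 and 2 share most of the weight; record 1 gets little
print(out)      # a blend of values 0 and 2, not a single returned row
```

Unlike the SQL query, no record is strictly excluded here. Record 1 still contributes, just with a small weight.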

Queries Enhance Understanding

Another good thing about the attention mechanism is that we implicitly ask the machine to write the query, use the returned result, and integrate different returned results to form another meaningful query and result, and so on.

At the beginning, if I don’t understand what CV is, I can query “CV”; all the computer vision papers will be returned, so I learn that CV stands for computer vision. Conversely, if I don’t understand what a paper is about, I can query by Title and return Category, and learn that it is a paper about NLP. Through iterative, if not exhaustive, querying, we understand the records better by building associations between them.

Likewise, in a Transformer we query every record, so that each record’s (word’s) association with all other records (words) is returned. For example, if I query “paper”, the result is a probabilistic estimate over the other words, in which “read” may be the most probable. By exhaustively querying all words, the machine can develop an understanding of the relationships among them.
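The "query every word against every word" idea can be sketched as a small table of attention weights. Everything here is an assumption for illustration: the four-word sentence, the random stand-in embeddings, and the random projection matrices `W_q` and `W_k`. Since nothing is trained, the printed numbers carry no meaning. The point is only the shape of the result: one probability distribution per word.

```python
import numpy as np

rng = np.random.default_rng(0)
tokens = ["i", "read", "a", "paper"]      # hypothetical example sentence
d = 8                                     # embedding size, chosen arbitrarily
X = rng.normal(size=(len(tokens), d))     # stand-in embeddings (random, untrained)
W_q = rng.normal(size=(d, d))             # stand-in for a learned query projection
W_k = rng.normal(size=(d, d))             # stand-in for a learned key projection

# Every word issues a query and is matched against every word's key.
Q, K = X @ W_q, X @ W_k
scores = Q @ K.T / np.sqrt(d)
scores -= scores.max(axis=-1, keepdims=True)   # numerical stability
weights = np.exp(scores)
weights /= weights.sum(axis=-1, keepdims=True)

# Row for "paper": a distribution over all words in the sentence.
row = weights[tokens.index("paper")]
for tok, w in zip(tokens, row):
    print(f"paper -> {tok}: {w:.3f}")
```

In a trained model the projections are learned, which is what would let a row like this put high probability on a related word.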

A Transformer has multiple attention heads and stacks attention on top of attention. You can imagine a Transformer as groups of smart analysts who collaboratively and iteratively use advanced semantic SQL to dig insights out of a very large database. Multiple middle-level managers each receive the insights from their direct reports and present the findings to their own managers (tougher than dual reporting), who ultimately distill them before passing them to the CEO.
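As a rough sketch of "multiple heads" and "attention over attention", the NumPy code below splits the features into heads, lets each head run its own query, merges the results, and then feeds the output into the next layer. It is deliberately simplified: the weights are random rather than learned, and the layer normalization and feed-forward sublayers of a real Transformer are left out. The function name and sizes are my own choices.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_self_attention(X, W_q, W_k, W_v, W_o, n_heads):
    n, d = X.shape
    d_head = d // n_heads

    def split(M):
        # (n, d) -> (n_heads, n, d_head): each head sees its own slice.
        return M.reshape(n, n_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split(X @ W_q), split(X @ W_k), split(X @ W_v)
    # Each head (an "analyst") runs its own soft query over all words.
    weights = softmax(Q @ K.transpose(0, 2, 1) / np.sqrt(d_head))  # (n_heads, n, n)
    heads = weights @ V                                            # (n_heads, n, d_head)
    # Merge the heads' findings back into one representation per word.
    merged = heads.transpose(1, 0, 2).reshape(n, d)
    return merged @ W_o

rng = np.random.default_rng(0)
n, d, n_heads, n_layers = 4, 8, 2, 3      # arbitrary toy sizes
X = rng.normal(size=(n, d))               # stand-in word embeddings

H = X
for _ in range(n_layers):
    # Fresh random weights per layer; a trained model would learn these.
    params = [rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(4)]
    # Each layer queries the previous layer's output (with a residual connection).
    H = H + multi_head_self_attention(H, *params, n_heads=n_heads)

print(H.shape)
```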

From Transformer to BERT and GPT

The famous BERT and GPT use the Transformer architecture to capture and store the semantic relationships among words from a very large corpus. Most other state-of-the-art language models are also based on the Transformer, because this database-like structure allows better retrieval, and therefore storage, of more complicated relationships in large amounts of data such as language.

This database is also transferable: you can think of any pre-trained model as compressed training data. Indeed, exploring these pre-trained models has become almost the de facto exercise for most practitioners.

It’s All About Query and Retrieval

A good memory (aka database) is essential to intelligence. Every thought you have is in effect a query to your brain’s database, which then returns relevant language, images and knowledge. Thinking is not possible without effective query and retrieval. As a result, finding an efficient data structure for better query and retrieval is a recurring focus of AI research, and that’s why we keep getting novel deep learning architectures to play around with!

“If you know SQL, you probably understand Transformer, BERT and GPT” was originally published in TDS Archive on Medium.